Hi all,

A brief note from me (too long for LinkedIn). I am digging deeper into Artificial Intelligence, not only because the World Economic Forum highlights 'misinformation and disinformation' in its Global Risks Report, but mainly because compelling economic analyses of the challenges around AI, and Generative AI in particular, are becoming more common. Concentration risk stands out as a major concern: we need to address it promptly to prevent a few entities from gaining excessive power, and to avoid losing control over AI.

We have a market problem with AI

I completely agree with the fear expressed in this article, called the AI-Octopus:

💬 "If artificial intelligence lives up to its promise and becomes the lifeblood of every sector of the economy, we can expect a future of economic concentration and corporate political power that dwarfs anything that came before."

The future of AI holds the potential for economic concentration and corporate political power, as Big Tech firms dominate the AI landscape. This differs from the start of the tech revolution, when new players were gaining ground. The AI industry is becoming a growing oligopoly, with companies like Nvidia, Amazon, Google, and Microsoft holding significant market power along the supply chain.

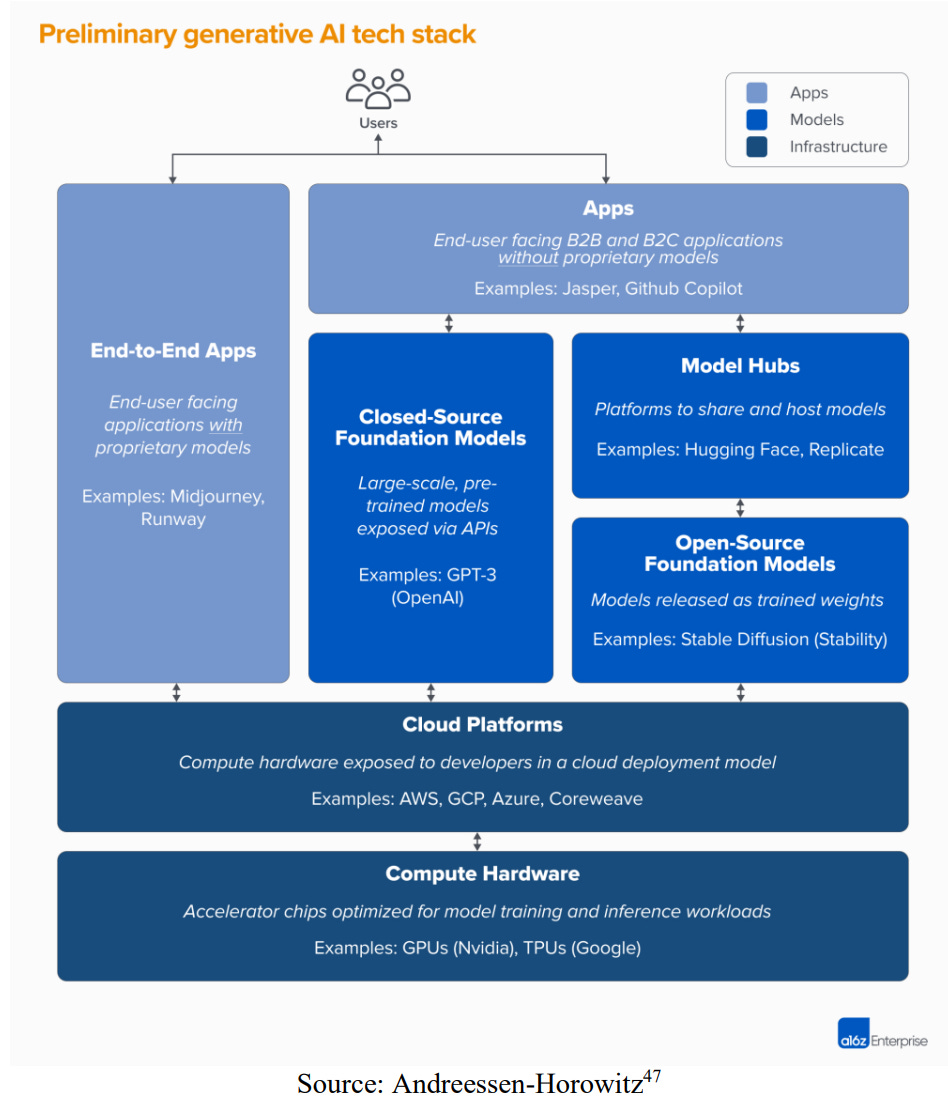

A recent paper by Narechania & Sitaraman explains why generative AI needs more regulation and how this can be done. I quite liked their opening, where they explain the generative AI tech stack (quoted below).

A long quote:

“There are four basic layers: microprocessing hardware, cloud computing, algorithmic models, and applications. The microprocessing hardware layer includes the production of microchips and processors — the horsepower behind AI’s computations. This layer is extremely concentrated, with a few firms dominating important aspects of production. The cloud computing layer consists of the computational infrastructure—the computers, servers, and network connectivity—that is required to host the data, models, and applications that comprise AI’s algorithmic outputs. This layer, too, is highly concentrated, with three firms (Amazon Web Services (AWS), Google Cloud Platform, and Microsoft Azure) dominating the marketplace.

The model layer is more complicated than the first two, as it includes three sublayers (and even more within those): data, models, and model access. One primary input for an AI model is data, and so the model’s layer’s first sublayer is data. Here, companies collect and clean data and store it in so-called “data lakes” (relatively unstructured data sources) or “data warehouses” (featuring relatively more structure). Foundation models (which are distinct from all models in general) comprise the second sublayer. Models are what many think of as “AI.” These models are the output of an algorithmic approach to analyzing and “learning” from the inputs that begin in the data sublayer. This “training” process is expensive, and so models can be intensely costly to develop. Lastly, the third sublayer consists of modes of accessing these models—model hubs and APIs (short for “application programming interfaces”).

Fourth, the application layer. Applications are the part of the sector that consumers interact with most directly: When we ask ChatGPT to tell us a joke about AI, we use an application (ChatGPT). The application draws on all prior layers in the stack: it interacts with a model (GPT4); that model is stored in a cloud computing platform (Microsoft’s Azure); and that platform requires microprocessing hardware (designed by Nvidia and fabricated by TSMC).”
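
To make the dependency chain in the quote concrete, here is a minimal sketch of the ChatGPT example as an ordered stack. The layer names and examples come from the quoted passage; the names `Layer`, `CHATGPT_STACK` and `dependencies` are my own, purely illustrative, and do not come from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    name: str     # layer name as used in the quoted passage
    example: str  # the example the quote gives for that layer


# Ordered from the layer closest to the consumer down to the hardware.
# Hypothetical structure for illustration only, not taken from the paper.
CHATGPT_STACK = [
    Layer("application", "ChatGPT"),
    Layer("model", "GPT4"),
    Layer("cloud computing", "Microsoft Azure"),
    Layer("microprocessing hardware", "Nvidia (design) / TSMC (fabrication)"),
]


def dependencies(stack, layer_name):
    """Return every layer below `layer_name`, i.e. everything it relies on."""
    names = [layer.name for layer in stack]
    index = names.index(layer_name)  # raises ValueError for an unknown layer
    return stack[index + 1:]


for layer in dependencies(CHATGPT_STACK, "application"):
    print(f"{layer.name}: {layer.example}")
```

The point of the sketch: an application sits on top of three other layers, so whoever controls a lower layer has leverage over everything above it.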

Why does all this matter? Because it makes clear that much of what happens in the generative AI stack is neither a matter of innovation nor unavoidable. It is simply industrial organisation: what do we think about market concentration? For most economists the answer is straightforward: too much market concentration is always bad, and in the case of generative AI even more so.

Why Bad?

Collusion and coordination among tech executives, reminiscent of the Gilded Age "money trust," raise concerns. Close connections through institutions, research projects, and social relationships create opportunities for collusion or coordination, potentially leading to illegal practices. Tech giants, similar to banks in the Gilded Age, wield immense influence across the economy, controlling data and exerting more influence than traditional banks. The dominance of Big Tech in the AI sector may result in economic concentration and corporate political power unparalleled in history.

And the risks are higher than ever before.

Consider this gloomy quote from the Global Risks Report:

“Over the longer-term, technological advances, including in generative AI, will enable a range of non-state and state actors to access a superhuman breadth of knowledge to conceptualize and develop new tools of disruption and conflict, from malware to biological weapons. In this environment, the lines between the state, organized crime, private militia and terrorist groups would blur further. A broad set of non-state actors will capitalize on weakened systems, cementing the cycle between conflict, fragility, corruption and crime.”

Solutions

Can this be prevented? The idea, at least, is that current regulation, which sets requirements on outcomes (ethical and so on), is not enough. Regulating market structure and increasing competition might work better (what the authors call ex-ante regulation). Some ideas from the paper:

Structural Separations: Separate the services provided by one layer from the activities that rely on those services. Most notably, structurally separating the cloud layer from higher layers in the stack could address a wide range of the market dominance problems identified above. Tellingly, this idea was first launched in the Gilded Age to curb the monopoly of the railroads.

Nondiscrimination, Open Access, and Rate Regulation: Nondiscrimination rules allow a firm to operate two or more vertically-linked business lines, but require the firm to treat downstream businesses neutrally.

Interoperability Rules: Interoperability rules lower barriers to entry and thus stimulate competition by “allowing new competitors to share in existing investments” and “imposing sharing requirements on market participants”. One practical type of interoperability rule would be to mandate data sharing through federated learning. Likewise, policymakers might consider rules that improve interoperability among cloud platforms, easing transitions from one provider’s system to another.

Entry Restrictions and Licensing Requirements: First, entry restrictions might be deployed to ensure that certain foundation models and their associated applications are effective, and do not pose substantial risks to health and safety, or of bias. Similarly, licensing rules could oblige cloud providers to “know their customers,” as in banking law. Likewise, entry restrictions might help to address concerns about costly and wasteful investment, and the tendencies towards consolidation.

Public options or cooperative governance: Public options are publicly-provided goods or services that coexist with private market options, offered at some (often regulatorily-)set price. Cooperative governance is a model where the owners of the company are users, users control the company, and the purpose of the company is to benefit the users.

It is clear that the AI Octopus will not go away by itself. But relatively straightforward economic analysis can help limit the Octopus's power.

The funny thing is that one of the authors works at Vanderbilt University, named after Cornelius Vanderbilt, one of the people who made their fortune in the Gilded Age based on… market power.